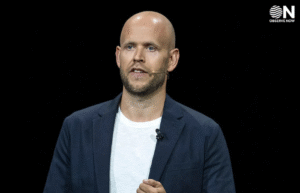

DeepMind CEO Highlights AI’s Struggle With Simple Math Despite Complex Achievements

Demis Hassabis, the CEO of Google DeepMind, has drawn attention to a paradox in the capabilities of advanced artificial intelligence systems. State-of-the-art models like Google DeepMind’s Gemini can solve high-level mathematics problems, even performing at the level of medalists in prestigious competitions such as the International Mathematical Olympiad (IMO), yet they still stumble on simpler, high-school–level math questions. This contrast, Hassabis argues, reveals critical shortcomings in the reasoning and generalization abilities of current AI systems, despite their apparent intelligence.

Hassabis explained that large AI models trained on vast datasets can sometimes display remarkable problem-solving ability in complex, abstract domains. When given advanced proofs or multi-step theoretical challenges, these models can chain together logical steps, deploy relevant theorems, and even generate novel solution approaches. In benchmark testing, Gemini and similar models have impressed researchers by producing results that would require years of specialized training for humans to achieve. However, the same systems can produce surprisingly wrong answers when confronted with more basic tasks—such as simple algebraic manipulations, straightforward geometry, or basic number theory questions commonly taught in high school.

This inconsistency is not just an amusing quirk; it raises serious concerns about AI reliability. In real-world applications, the inability to consistently handle simpler problems can undermine trust in AI decision-making, particularly in education, engineering, finance, and scientific research. Hassabis noted that these failures often arise because advanced AI models rely heavily on pattern recognition from their training data rather than genuine mathematical reasoning. While they can mimic the steps of complex problem-solving when similar examples exist in their dataset, they may struggle when asked to apply foundational principles in unfamiliar or slightly altered contexts.

The paradox also underscores the limitations of current AI training paradigms. Models like Gemini are built on massive neural networks with billions of parameters, trained on diverse internet-scale datasets. While this enables them to excel at pattern-rich challenges, it does not guarantee true conceptual understanding. Human mathematicians, in contrast, develop intuition and adaptive reasoning skills that allow them to solve both simple and complex problems with consistency. For AI, bridging that gap will likely require more than scaling up data and computing power; it may involve fundamentally new architectures and training approaches that better emulate human reasoning.

Hassabis suggested that improving AI’s mathematical generalization could have transformative effects across industries. Reliable problem-solving across all complexity levels would enhance AI-assisted scientific discovery, accelerate engineering innovation, and revolutionize personalized education tools. DeepMind researchers are already experimenting with targeted training strategies, such as curriculum learning, where models are taught concepts progressively from simple to complex, mirroring human education systems. They are also exploring hybrid approaches that combine symbolic reasoning engines with deep learning models, potentially allowing AI to “think” more like a mathematician.
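
To make the idea of curriculum learning concrete, here is a minimal sketch in Python of how training problems might be staged from simple to complex. It is an illustration only, not DeepMind's method: the `Problem` class, the 1-to-3 difficulty labels, and the `make_problem` and `curriculum_stages` helpers are all hypothetical names invented for this example, and the choice to keep easier problems in later stages is an assumption.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Problem:
    prompt: str       # arithmetic expression shown to the model
    answer: int       # ground-truth result
    difficulty: int   # hypothetical label: 1 (easy) to 3 (hard)


def make_problem(difficulty: int, rng: random.Random) -> Problem:
    """Build a toy arithmetic problem at the requested difficulty."""
    a, b = rng.randint(1, 9), rng.randint(1, 9)
    if difficulty == 1:
        return Problem(f"{a} + {b}", a + b, 1)
    if difficulty == 2:
        return Problem(f"{a} * {b}", a * b, 2)
    c = rng.randint(1, 9)
    return Problem(f"{a} * {b} + {c}", a * b + c, 3)


def curriculum_stages(problems):
    """Yield (level, pool) pairs, easiest level first.

    Each pool is cumulative: it contains every problem at or below the
    current level, so earlier material stays in the mix (an assumption
    of this sketch, not a claim about any real training setup).
    """
    levels = sorted({p.difficulty for p in problems})
    for level in levels:
        yield level, [p for p in problems if p.difficulty <= level]


rng = random.Random(0)
problems = [make_problem(d, rng) for d in (3, 1, 2, 1, 3, 2)]

for level, pool in curriculum_stages(problems):
    # A real system would run a training step on `pool` here.
    print(f"stage {level}: training on {len(pool)} problems")
```

In this sketch the unordered problem set is regrouped into three stages of growing size, so a learner would see addition first, then multiplication, then mixed expressions. A real curriculum would need a principled way to score difficulty, which this toy example simply assumes is given.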

The AI math paradox serves as a reminder that intelligence is not defined solely by the ability to solve difficult problems. True intelligence, in both humans and machines, lies in mastering the fundamentals as well as the advanced. Hassabis believes closing this gap is one of the next major frontiers for AI research—and solving it could push the boundaries of what artificial intelligence can achieve.