OpenAI and Google Forge Unlikely Cloud Alliance Amid AI Compute Crunch

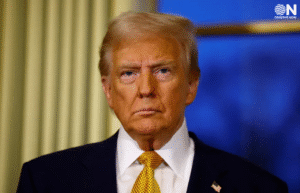

In a striking twist in the intensifying AI landscape, OpenAI and Google have reached a strategic agreement allowing OpenAI to tap into Google Cloud’s infrastructure for expanded computing capacity. Despite being fierce rivals in the generative AI space—with OpenAI’s ChatGPT and Google’s Gemini battling for dominance—both companies appear to be aligning resources to address a shared challenge: surging demand for large-scale AI compute.

According to people familiar with the matter, the deal was finalized in May 2025 after several months of negotiations. Under the agreement, Google will supply OpenAI with additional high-performance computers—complementing existing infrastructure provided by Microsoft Azure and the so-called “Stargate” initiative with Oracle and SoftBank. As one source put it, the primary catalyst for the deal is the ever-growing need for GPU and TPU resources to train and run advanced models like GPT-4 and soon GPT-5.

The collaboration marks a significant departure from OpenAI’s previous cloud strategy. Since 2019, Microsoft had held exclusive cloud-provider status, hosting OpenAI’s massive model-training workloads. Earlier this year, however, Microsoft relaxed that arrangement, keeping a right of first refusal on new capacity in place of exclusivity, which cleared the way for OpenAI to diversify its compute sources.

From Google’s perspective, hosting OpenAI presents both strategic and financial opportunity. Google Cloud, which generated roughly $43 billion in 2024 revenue, is actively promoting its Tensor Processing Units (TPUs) and positioning itself as a neutral yet AI-optimized infrastructure provider. As market analysts note, this opens new avenues beyond internal AI tooling and historic partnerships, drawing in major clients like Apple, Anthropic, and now, even OpenAI.

Financial markets reacted swiftly. Alphabet’s shares rose about 2 percent following news of the agreement, while Microsoft’s stock dipped modestly, by around 0.6 percent, on shifting investor expectations.

Observers say the deal reflects how heavily AI development depends on one key resource: compute. Analysts at Scotiabank described the partnership as “somewhat surprising” but applauded it as a clear signal that big players are prioritizing infrastructure provisioning over turf wars. It underscores a broader industry trend, as startups and AI labs increasingly spread workloads across multiple cloud vendors to secure greater scalability and resilience.

That said, technical and strategic challenges lie ahead. Google’s own cloud unit has faced capacity pressures: Alphabet CFO Anat Ashkenazi has acknowledged that demand already outstrips available supply. Allocating capacity to OpenAI may require careful balancing, potentially affecting internal projects like DeepMind and Gemini, along with other Google Cloud customers.

Meanwhile, OpenAI isn’t resting on this partnership. It continues to pursue other sources of capacity, including its Stargate data center program, a mammoth $500 billion initiative involving Oracle and SoftBank, and its $11.9 billion CoreWeave cloud agreement. Coupled with its ambition to develop in-house AI chips to reduce reliance on external GPU vendors, OpenAI is clearly pursuing a diversified, long-term strategy.

As the AI arms race escalates, this cooperative move underlines the paradox at the heart of the industry: fierce competition can coexist with cooperative utility, especially when compute capacity is a bottleneck.

By tapping Google Cloud, OpenAI safeguards against over-reliance on a single provider, while Google leverages the collaboration to boost its cloud credentials. Both stand to gain—computationally and commercially—even as they vie for AI supremacy.